by Hamish Chamberlayne – Head of Global Sustainable Equities and Richard Clode – technology equities portfolio manager

Graphics processing units (GPUs) are engines for the future of computing. Designed for parallel processing, a GPU is a specialised electronic circuit that works alongside the brain of the computer, the central processing unit (CPU), to accelerate demanding computing tasks. If you are reading this article on an electronic device, it is likely that a GPU is powering your screen display.

While GPUs were initially used for computer graphics and image processing in personal and business computing, their use cases have broadened significantly as technology has evolved. Moore’s Law, the observation that the number of transistors on an integrated circuit doubles roughly every two years while the cost of computing falls accordingly, has democratised GPUs by making them cheaper and more readily available, accelerating their adoption across multiple industries. Today, high-performance GPUs are central to many different technologies and will form the basis of the next generation of computing platforms.
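As a rough illustration of the compounding behind Moore’s Law, the idealised two-year doubling can be written as a simple growth formula. Here $N_0$ is the transistor count at a starting point, $C_0$ the cost of a fixed amount of computing at that point, and $t$ the elapsed time in years. These symbols are our own, and the clean doubling and halving periods are a simplifying assumption rather than an exact law.

```latex
\begin{aligned}
N(t)  &= N_0 \cdot 2^{t/2},               & C(t)  &= C_0 \cdot 2^{-t/2} \\
N(10) &= N_0 \cdot 2^{5} = 32\,N_0,       & C(10) &= C_0 \cdot 2^{-5} = \tfrac{C_0}{32}
\end{aligned}
```

Under these assumptions, a decade brings roughly 32 times as many transistors for about one thirty-second of the cost.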

The power of parallelism: applications in AI and deep learning

GPUs are built to run large numbers of calculations at the same time, which increases computing efficiency and overall performance. While this benefits end markets such as gaming, where players enjoy high-quality, real-time computer graphics, it can also be applied to more serious use cases.

The ability of GPUs to process large blocks of data in parallel makes them well suited to training artificial intelligence (AI) and deep learning models. These models require enormous numbers of calculations across a neural network to be performed simultaneously. The applications of deep learning are broad, ranging from web services to autonomous vehicles and medical research.1
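To illustrate why neural networks suit parallel hardware, the sketch below computes one layer of a tiny neural network in two ways. Each neuron’s output depends only on the shared inputs and its own weights. Because the calculations are therefore independent of one another, they can run at the same time, and this is the property GPUs exploit across thousands of cores. The function names, weights and inputs are hypothetical. Python threads are used here only to show that the order of execution does not change the result; they do not deliver GPU-style speed-ups.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights: list[float], inputs: list[float], bias: float) -> float:
    """One neuron: weighted sum of the inputs plus a bias, passed through ReLU."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return max(0.0, total)


def layer_sequential(weight_rows: list[list[float]], inputs: list[float],
                     biases: list[float]) -> list[float]:
    """Compute every neuron in the layer one after another, CPU-loop style."""
    return [neuron_output(w, inputs, b) for w, b in zip(weight_rows, biases)]


def layer_parallel(weight_rows: list[list[float]], inputs: list[float],
                   biases: list[float], workers: int = 4) -> list[float]:
    """Compute the neurons concurrently.

    No neuron needs another neuron's result, so they can be evaluated in any
    order or all at once; pool.map still returns results in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda wb: neuron_output(wb[0], inputs, wb[1]),
                             zip(weight_rows, biases)))


if __name__ == "__main__":
    # Hypothetical layer: three inputs feeding three neurons
    inputs = [0.5, -1.0, 2.0]
    weight_rows = [[0.2, 0.4, 0.1], [-0.3, 0.8, 0.5], [1.0, 0.0, -0.5]]
    biases = [0.0, 0.1, 0.2]
    print(layer_sequential(weight_rows, inputs, biases))
    print(layer_parallel(weight_rows, inputs, biases))
```

On real GPU hardware, frameworks typically express the same layer as a single matrix multiplication and spread the independent pieces across many cores at once.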

Digitalisation driving decarbonisation

While GPUs have already had a positive impact on real-world challenges, their potential to shape innovation across industries has yet to be fully explored. The application of AI and deep learning is essential to a successful digital future, and this is already becoming a reality as digitalisation grows. Importantly, this trend is affecting every industry, so efficient and powerful technological capabilities will be critical as businesses begin their digital transformations.

In terms of its impact, we believe that digitalisation plays a positive role in economic development and social empowerment, and we also see a close alignment between digitalisation and decarbonisation. Digitalisation ‘cracks’ open the shell of traditionally analogue functions. This promotes data transparency and gives businesses and individuals the knowledge to make well-informed decisions about consumption, production and reduction based on their current behaviour. For example, efforts to lower carbon emissions and meet ambitious climate targets can benefit from data extraction, transformation and analysis to determine the best course of action.

We have already begun to see digitalisation penetrate and advance traditional practices. Manufacturers integrate technology into industrial processes to optimise production, building managers use smart technology and data analytics to ensure energy is consumed only when necessary, and intelligent transportation systems analyse traffic data to reduce congestion, fuel use and emissions. Elsewhere, many digital services have started to replace more energy-intensive traditional methods. For example, online meetings reduce business travel and, with it, carbon footprints.

Does powering technology come at a cost?

One of the critical challenges of a global digital transformation is the significant energy required for high-performance computing. It is important for us to understand the true energy cost of technology, and what can be done to lower overall energy consumption.

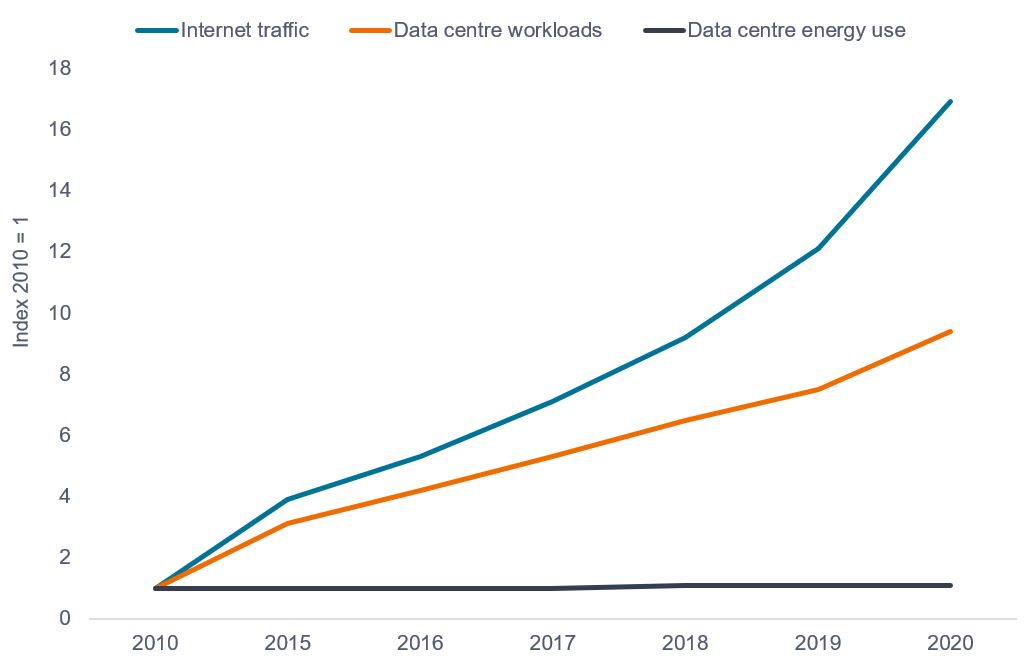

There is a misconception that an increase in data centre usage necessarily means a proportional increase in energy demand. According to the International Energy Agency (IEA), data centre energy use has remained broadly flat despite an explosion in data centre workloads and internet traffic (chart 1). This disparity is driven by more efficient hardware and operating processes. GPUs help limit the energy strain that high-performance computing places on data centres. For AI applications, some GPUs can be up to 42 times more energy-efficient than traditional CPUs. Meanwhile, some hyperscale GPU-based data centres need only 2% of the rack space of equivalent CPU-based systems.2 In short, GPUs pack a punch. By enabling smarter use of energy, they help keep energy use to a minimum.

Chart 1: data centre energy use remains flat

Source: IEA, Global trends in internet traffic, data centres workloads and data centre energy use, 2010-2020, IEA, Paris https://www.iea.org/data-and-statistics/charts/global-trends-in-internet-traffic-data-centres-workloads-and-data-centre-energy-use-2010-2020
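The efficiency figures quoted in this section can be put in proportion with some simple arithmetic. This assumes that "42 times more energy-efficient" means a GPU completes the same AI workload using one forty-second of a CPU’s energy, and that the 2% rack-space figure is measured against an equivalent CPU-based system. The labels $E$ (energy) and $S$ (rack space) are our own.

```latex
\begin{aligned}
E_{\text{GPU}} &= \frac{E_{\text{CPU}}}{42}
  &&\Rightarrow\; 1 - \frac{1}{42} = \frac{41}{42} \approx 97.6\%\ \text{less energy for the same workload} \\
S_{\text{GPU}} &= 0.02\,S_{\text{CPU}}
  &&\Rightarrow\; \frac{S_{\text{CPU}}}{S_{\text{GPU}}} = \frac{1}{0.02} = 50\text{-fold less rack space}
\end{aligned}
```

Because the 42× figure is quoted as an upper bound, typical savings in practice are likely to be smaller.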

The path to net zero

Like all industries, technology will have to do its part to address global climate change and reduce its own environmental footprint, with the goal of achieving net-zero emissions. In 2020, the IEA released its annual Tracking Clean Energy Progress report, which assesses the key energy technologies and sectors that are critical for slowing global warming. Of 46 sectors assessed, the IEA named data centres and data transmission networks as one of only six that were on track to meet its Sustainable Development Scenario. However, the surge in global internet usage during COVID-19, driven by increased video and conference streaming, online gaming and social networking, saw this ranking slide to “more efforts needed” in the 2021 report.3

Despite this setback, we believe a focus on ongoing efficiency improvements in data centre infrastructure is integral to meeting net-zero goals, reinforcing the role that GPUs play in creating a sustainably digitalised world.

Ethical implications behind AI

While AI’s broad range of use cases brings many benefits, greater adoption of the technology also carries significant ethical risks.

Where AI is cheaper, faster and smarter than human labour, it can be used to replace existing workforces. Chatbots with natural language processing abilities have replaced call centre staff, automated machinery has replaced many factory workers, and robo-taxis could soon replace human drivers. We recognise the impact that this could have on employment, especially where the affected jobs are concentrated in particular areas, and believe it is vital to consider the long-term consequences for society in these instances. However, we also see a benefit in handing certain monotonous roles over to AI. Freeing up human capital gives individuals the opportunity to take on more fulfilling roles that AI cannot perform, such as personal training, creative design and teaching. In doing so, we believe society could be enriched.

It is also important to acknowledge the sinister purposes for which the technology could be used. The US government has recently acted to restrict the export of Nvidia’s top-end GPU chips to China. The aim is to prevent certain Chinese companies from purchasing GPUs to enable mass surveillance, notably of the Uighur Muslims. We fully welcome any restrictions that aim to reduce potential ethical threats to society.

Some companies, including Nvidia, have also adopted ethical frameworks to embed ‘trustworthy AI’ principles within their product ecosystems. We see great value in placing ethical principles at the core of product design and development to foster positive change and transparency in AI development.

Conclusion

Digitalisation is the cornerstone of our future. From humble beginnings, the GPU has evolved into one of the most critical facilitators of innovation and digital transformation for society. We also believe that the next generation of computing is essential to achieving global sustainability goals. When analysing individual companies, we believe that shifting to a low-carbon business model is a marker for long-term success, and we look to technology to enable this change.

Footnotes

1 Nvidia blog, ‘World Record-Setting DNA Sequencing Technique Helps Clinicians Rapidly Diagnose Critical Care Patients’, 2022

2 Nvidia, Corporate Social Responsibility Report, 2021

3 International Energy Agency, Tracking Clean Energy Progress Report, 2022

Definitions

Deep learning: involves feeding a computer system large amounts of data, which it then uses to make decisions about other data. This data is fed through neural networks. These are logical constructions that ask a series of binary true/false questions of, or extract a numerical value from, all the data passing through them, and classify it according to the answers received.
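The definition above can be made concrete with a single artificial neuron, known as a perceptron. A perceptron is far simpler than the many-layered networks used in deep learning, but it follows the same idea. It extracts a numerical value from each input, turns that value into a true/false answer, and learns from labelled data how to answer for data it has not seen. The toy data set (true only when both inputs are 1), the function names and the training settings are our own illustrative choices.

```python
def predict(weights: list[float], bias: float, features: list[float]) -> bool:
    """Extract a numerical value (a weighted sum) and turn it into a true/false answer."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return score > 0


def train(examples: list[tuple[list[float], bool]],
          epochs: int = 20,
          learning_rate: float = 1.0) -> tuple[list[float], float]:
    """Perceptron learning rule: adjust the weights whenever an example is misclassified."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # error is +1 (missed a true case), -1 (false alarm) or 0 (correct)
            error = int(label) - int(predict(weights, bias, features))
            if error:
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias


if __name__ == "__main__":
    # Toy training data: the answer is true only when both inputs are 1
    training_data = [([0.0, 0.0], False), ([0.0, 1.0], False),
                     ([1.0, 0.0], False), ([1.0, 1.0], True)]
    weights, bias = train(training_data)
    # Classify new data the model was not trained on
    print(predict(weights, bias, [0.9, 0.8]))  # True
    print(predict(weights, bias, [0.2, 0.3]))  # False
```

Deep learning stacks many layers of such units and adjusts their weights with gradient-based methods rather than this simple rule. The underlying principle of learning from examples is the same.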